The Future of Conversational AI for Organisations

I've been talking quite a bit lately about approaches for providing safe conversational AI interfaces by constraining LLMs' agency so that they act only within limits defined by algorithms. This has sparked quite a few interesting real-world conversations with people who have been thinking about it for a while, and I now feel able to distil those conversations into something coherent.

This approach is often described as a "hybrid", in which both AI and algorithms play a part in defining the actions. While that may be the bottom-up way we architect systems, it is a much more fundamental principle than that.

First-generation conversational interfaces were more like code: scripted systems that fitted the dialogue for one job to a strict transaction flow. They used a bit of AI for language-based fuzzy intent matching, but were otherwise fully scripted, deterministic conversation trees.

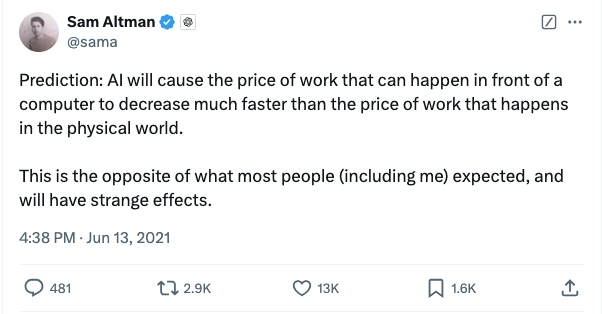

LLM-powered conversational AI is different. It will join human-powered interfaces as the flexible bridge between an organisation's business model and the messy, lossy, incoherent, badly thought-out world that our customers live in and communicate from.

In any organisation of a significant size, we are already used to constraining human agents to limit damaging mistakes and to focus them on actions that are consistent with our business models. We give them operating policies and rules, and we constrain their actions through systems that allow only certain defined outcomes from any given starting point.

We should treat LLMs in exactly the same way. Mature, data-driven organisations already define their core business model in terms of things that are either code or pretty close to code. The key to safely unlocking the benefits for these organisations will be extending the internal APIs over their algorithms and data so that they drive and constrain conversational interfaces in line with these well-developed definitions of their processes.

For other organisations that rely solely on human alignment and agency to define and deliver their products and services, the value is not as obvious. These are not in any way second-class organisations; indeed, smart, aligned humans operating with maximum agency and empathy deliver massive value in sectors like hospitality and other personalised services. They just won't be able to use AI safely as their core interface to clients. This is no bad thing, as long as it is acknowledged and accepted. I suspect, though, that there will be a few disasters and negative case studies before we do acknowledge this principle.

So that is my thesis: stop thinking of LLMs as computer technology to be given absolute agency and then criticised for being flawed or dangerous. Instead, think of them as an intrinsically non-deterministic translation layer that sits between the messy world humans live in and your rules-based business model.

Just as with human employees, as long as business rules drive the agent and constrain its actions to align it with all the detail of your operating principles, this will mostly work very well. It will, however, occasionally generate problems that need to be remediated. The key will be adapting the way the algorithm constrains the AI, both to reduce the severity of those problems in the first place and to prevent them recurring.
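To make the idea of business rules driving and constraining the agent a little more concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: the refund states, the `ALLOWED_ACTIONS` table, `ConstrainedAgent` and `keyword_stub` are invented names, and `keyword_stub` stands in for a real LLM call. The model proposes an action and a reply; the deterministic rules decide whether that action is permitted from the current state, fall back to a safe default if it is not, and record the rejection so the constraints can be adapted later.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical business model for a refund conversation: the only actions the
# organisation's rules allow from each state (the "algorithm" side).
ALLOWED_ACTIONS: dict[str, set[str]] = {
    "start": {"lookup_order", "escalate_to_human"},
    "order_found": {"offer_refund", "offer_replacement", "escalate_to_human"},
    "refund_offered": {"issue_refund", "escalate_to_human"},
}

SAFE_FALLBACK = "escalate_to_human"


@dataclass
class Proposal:
    action: str  # what the model wants to do
    reply: str   # what the model wants to say


# The non-deterministic translation layer: given the state, the customer's
# words and the permitted actions, it proposes an action and a reply.
Proposer = Callable[[str, str, set[str]], Proposal]


@dataclass
class ConstrainedAgent:
    propose: Proposer
    # (state, action) pairs that the rules blocked, kept for later review.
    rejected: list[tuple[str, str]] = field(default_factory=list)

    def step(self, state: str, user_text: str) -> Proposal:
        allowed = ALLOWED_ACTIONS.get(state, set())
        proposal = self.propose(state, user_text, allowed)
        if proposal.action in allowed:
            return proposal
        # The rules, not the model, have the final say. Log the rejection so
        # the constraints can be adapted to stop it recurring.
        self.rejected.append((state, proposal.action))
        return Proposal(SAFE_FALLBACK, "Let me pass you to a colleague who can help with that.")


def keyword_stub(state: str, user_text: str, allowed: set[str]) -> Proposal:
    """Hypothetical stand-in for an LLM; ignores `allowed` to show the guard working."""
    if "money back" in user_text.lower():
        return Proposal("issue_refund", "I've refunded your order.")
    return Proposal("lookup_order", "Let me find your order.")
```

With `agent = ConstrainedAgent(keyword_stub)`, calling `agent.step("start", "I want my money back")` is escalated rather than refunded, because `issue_refund` is not allowed from `start`, and the attempt is recorded in `agent.rejected`. The same request in the `refund_offered` state is permitted. The design choice is that the model only ever proposes; the rules table remains the single place where the business model decides what can actually happen.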

Businesses that already know precisely and algorithmically what they do, and that drive conversational AI to align with this, will be able to exploit the technology to scale. Those that don't will probably need either to figure out how to describe their business processes more precisely, or to differentiate themselves by acknowledging, with no shame, that they are powered by humans, not algorithms!

Thoughts? Comment here...