AI and the value of knowledge work

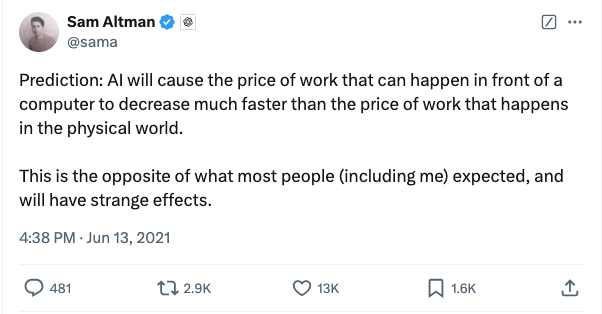

This tweet was probably pretty controversial back in mid-2021 but, with suitable qualification, the number of informed people who would now argue with it must be pretty small. I've been thinking quite a lot lately about how a society like the UK will probably deal with this, and about the implications for my own actions.

To put this trend into context: in 1984, the year Margaret Thatcher's fight to the death with UK coal workers started, there were 139,000 people directly and indirectly employed in the coal industry. Some parts of the country still have social and economic scars today. Currently 3.16 million people earn a living from admin and secretarial roles, and another 3.1 million put a roof over the heads of their families as managers and directors. We have 465,000 software developers. Even if your personal view is "good riddance", if only a small fraction of these jobs disappear in a relatively short period of time, it will have profound personal impacts that our social security system will be called upon to mitigate, and measurable social ones at scale.

This is even more alarming if you dig into job roles by area in datasets like this one and realise that areas that were hammered by de-industrialisation in the last generation now have large numbers of lower-value knowledge work jobs, like call centre workers, who will be in the first wave of AI disruptions!

There have been similar social revolutions in the last 60 years or so which may give us some indication of how our systems will absorb the profound change. In the west these include de-industrialisation and financial market deregulation, with the consequent data-driven financialisation of most aspects of people's daily lives.

It seems like a pretty good guess that we will just go with the economic flow and largely let it happen without any effective management or social consensus. There aren't really any other likely outcomes. Smart, already high-agency actors who see the curve will position themselves to gain by it. Most of the rest of society (including legislators) will be like the boiled frog. Actually a lamped pheasant is a better analogy for governments. It won't be directly catastrophic, nobody will get boiled alive, not that many people will even die. We will, however, only realise the full implications once the AI revolution is a matter of slow historical fact because of a million process optimisations and piecemeal redundancies.

What concerns me is that our social and political systems are already stressed because we have never developed an honest social consensus about the effects of prior waves of de-industrialisation and financialisation which happened decades ago. A substantial chunk of our population still thinks (with rational cause) that these were a bad thing. A slightly bigger chunk is reasonably happy with the access to nicer things more cheaply that this has created for them. I think we are more divided, and more susceptible to divisive messages, as a result of that process.

My own assessment of P(doom) from a runaway AI usurping control and enslaving or extinguishing humanity is in what I think is a rationally low <1% range. Using a wider definition of local P(bad_things) that includes mass unemployment escalating to destructive social unrest and terminal destabilisation of western political systems, it has to be much higher. Probably well into double figures depending only on an assessment of whether the wisdom of crowds of fellow travellers will save us. More tangibly, the destabilising effect of early AI enablement of bad actors propagating messages exploiting division is now measurable. Given the gift of better AI personalisation tools and a new, rich pool of disaffected and underemployed knowledge workers these techniques will only gain more leverage.

As a politically naive practitioner, I don't really know what to do about it. Stopping development is pointless, counterproductive even. The trajectory is inevitable by the mere fact that the technology exists. Compelling economic benefits are foreseen by financial markets. Governments see big execution benefits and are in any case themselves largely slaves to the wisdom of those markets. There is no indication that AI development will stop, or even decelerate in any measurable way, in response to social concerns. History teaches us that Ned Ludd achieved nothing in the grand scheme of things. Titus Salt maybe...

Unlike previous waves, I sense there is an imperative to distribute the value as a social premium much more obviously and quickly to keep society relatively intact.

What's your take on how we individually do this - is it something that implementers optimising for good outcomes can influence?

First serious post here... What does the AI and the value of knowledge work, what can we expect government to do, and how should we respond individually... www.pickering.org/ai-and-the-p... TL;DR Don't worry about P(doom), P(bad_things) is much more likely, and possibly influenceable.

— Rob Pickering (@rob.pickering.org) 27 December 2024 at 12:34
